18-02-2026

Sync has moved

The Sync project is now hosted on its dedicated platform: www.syncspatialmusic.com

All updates, documentation, and future developments will be published there.

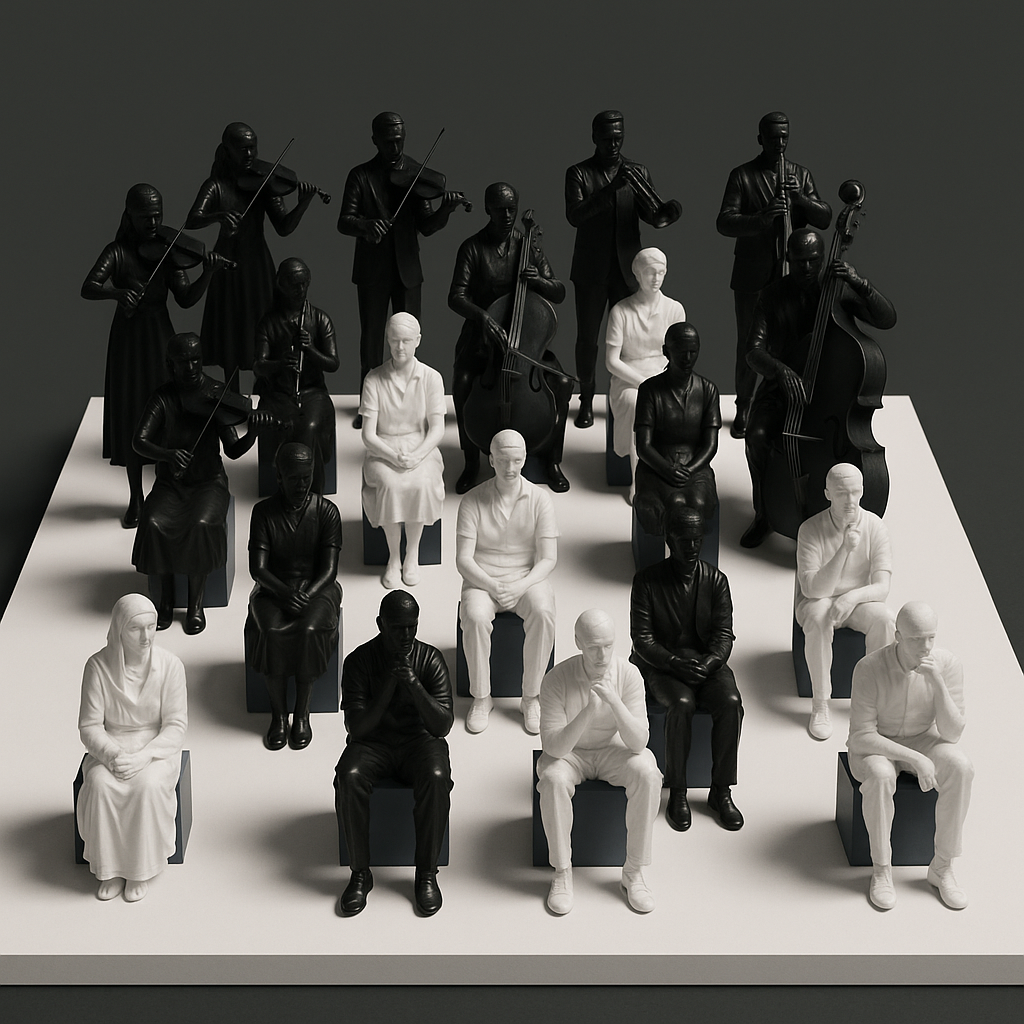

Sync is a distributed system for composing and performing music in real time as a spatial experience.

It enables evolving instructions to flow across a network of performers.

- Mobile-based performer interface

- Designed to scale from 5 to 100+ performers

- Supports real-time triggering & latency control

- Integrates with Max for Live & Ableton

- Spatial harmony & motion logic

- Supports generative, rule-based, & algorithmic composition

Each performer becomes a node in a distributed network, receiving individualized instructions in real time.

These instructions can be deterministic or rule-based, enabling both precision and variation. The audience is immersed inside the system, surrounded by sound that unfolds dynamically, shaped by proximity, spatial relations, and performer interaction.
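The page doesn't say how instructions are generated or encoded. As a minimal sketch, assuming an instruction is just a pitch and a dynamic addressed to a performer ID, the deterministic and rule-based modes might look like this. `Instruction`, `deterministic_instructions`, and `rule_based_instructions` are hypothetical names, not part of Sync.

```python
from __future__ import annotations

import random
from dataclasses import dataclass

# Hypothetical sketch: these names are illustrative, not Sync's API.


@dataclass(frozen=True)
class Instruction:
    performer_id: str
    pitch: str    # note name, e.g. "A4"
    dynamic: str  # e.g. "pp", "mf", "ff"


def deterministic_instructions(score: dict[str, list[tuple[str, str]]], step: int) -> list[Instruction]:
    """Fixed score: each performer reads entry `step` of their own part, looping at the end."""
    return [
        Instruction(pid, *part[step % len(part)])
        for pid, part in sorted(score.items())
    ]


def rule_based_instructions(performers: list[str], pitch_set: list[str], step: int, seed: int = 0) -> list[Instruction]:
    """Rule-based: each performer draws a pitch and a dynamic from shared sets.

    The generator is seeded from the seed and the step, so the same step always yields the same choices.
    """
    rng = random.Random(seed * 100_003 + step)
    dynamics = ["pp", "p", "mf", "f", "ff"]
    return [Instruction(pid, rng.choice(pitch_set), rng.choice(dynamics)) for pid in performers]


score = {"D0": [("C4", "p"), ("E4", "mf")], "D1": [("G4", "f")]}
for instr in deterministic_instructions(score, step=1):
    print(instr)
```

Seeding per step keeps the rule-based mode reproducible, so the same variation can be recalled in rehearsal and performance while each performer still receives an individual draw.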

Step inside the system.

Move your cursor across a virtual grid of 25 performers and hear how sound shifts and blends in space.

It’s nothing like being surrounded by real musicians in the same room, but it offers a glimpse of how exciting that experience could be.
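The demo's mixing model isn't documented. One simple assumption is inverse-distance attenuation with total power held constant, sketched below for a 5 × 5 grid. The spacing, rolloff, and clamp values are illustrative, and the function names are hypothetical.

```python
import math

# Hypothetical sketch: an inverse-distance mixing model, not Sync's documented behavior.
GRID_SIZE = 5   # 5 x 5 = 25 performers, as in the demo
SPACING = 1.0   # assumed distance between neighbouring performers


def performer_positions(n=GRID_SIZE, spacing=SPACING):
    """Map each (row, col) performer to an (x, y) position on the floor."""
    return {(r, c): (c * spacing, r * spacing) for r in range(n) for c in range(n)}


def gains_for_listener(listener, positions, rolloff=1.0, min_dist=0.25):
    """Inverse-distance gain per performer, scaled so the squared gains sum to 1 (constant power)."""
    raw = {}
    for key, pos in positions.items():
        d = max(math.dist(listener, pos), min_dist)  # clamp so a performer under the cursor stays finite
        raw[key] = 1.0 / d ** rolloff
    norm = math.sqrt(sum(g * g for g in raw.values()))
    return {key: g / norm for key, g in raw.items()}


gains = gains_for_listener((2.0, 2.0), performer_positions())
loudest = max(gains, key=gains.get)
print(loudest, round(gains[loudest], 3))
```

Normalizing to constant power keeps the overall level steady as the cursor moves, so what changes is the balance between nearby and distant performers.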

Sync drives many outputs simultaneously.

Performers are arranged in a grid or network, each receiving individualized instructions via a mobile interface.

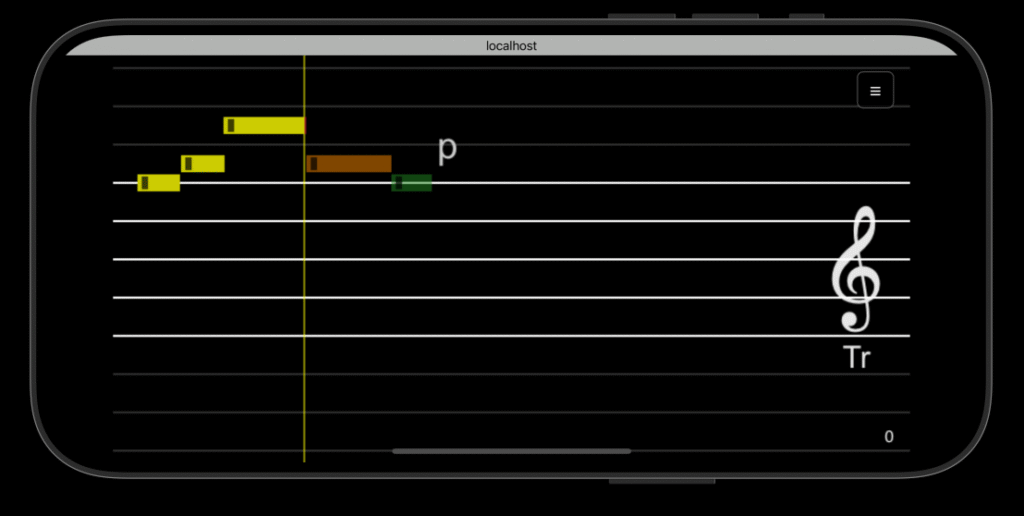

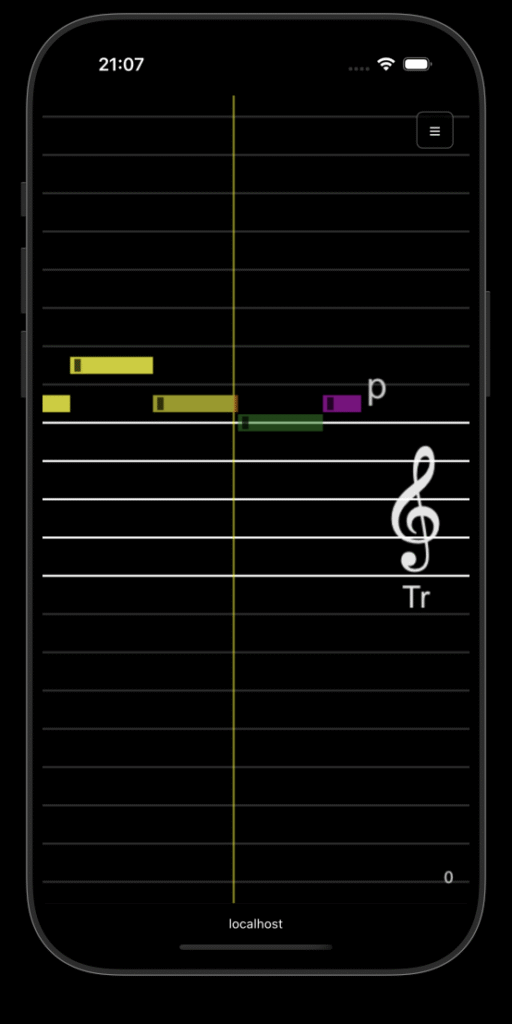

The platform includes a mobile-first user interface that adapts to any screen size.

It features a robust clef and note-positioning engine that supports nine clef types (Treble, Alto, Bass, Soprano, Mezzo-soprano, Tenor, Baritone, Treble 8vb, Sub-bass) with accurate pitch placement across a 44-semitone range, covering instruments such as piano, clarinet, flute, bassoon, and horn. Dynamics from pianissimo to fortissimo are supported. A choral lyrics system aligns text to notes syllable by syllable, handles hyphenation and melismas, routes lines to the soprano, alto, tenor, and bass (SATB) parts, and keeps all devices synchronized in real time.
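How Sync's engine positions notes internally isn't described here. A standard approach, sketched below as an assumption, counts diatonic steps (letter name plus octave) up from the pitch on each clef's bottom line; accidentals change the sign drawn, not the vertical position. Note names are treated as sounding pitches, so Treble 8vb sits an octave below Treble. The helper names are hypothetical.

```python
import re

# Hypothetical sketch of clef-relative staff placement; not Sync's actual engine.
# Sounding pitch on the bottom staff line for each clef (standard clef definitions).
BOTTOM_LINE = {
    "treble": "E4",
    "treble_8vb": "E3",     # reads like treble, sounds an octave lower
    "soprano": "C4",        # C clef on line 1
    "mezzo_soprano": "A3",  # C clef on line 2
    "alto": "F3",           # C clef on line 3
    "tenor": "D3",          # C clef on line 4
    "baritone": "B2",       # F clef on line 3
    "bass": "G2",           # F clef on line 4
    "sub_bass": "E2",       # F clef on line 5
}

STEP = {"C": 0, "D": 1, "E": 2, "F": 3, "G": 4, "A": 5, "B": 6}
NOTE_RE = re.compile(r"^([A-G])(#{1,2}|b{1,2})?(-?\d+)$")


def diatonic_index(note: str) -> int:
    """Count of diatonic steps from C0; the accidental is parsed but does not affect position."""
    m = NOTE_RE.match(note)
    if not m:
        raise ValueError(f"unrecognised note name: {note!r}")
    letter, _accidental, octave = m.groups()
    return int(octave) * 7 + STEP[letter]


def staff_position(note: str, clef: str) -> int:
    """0 = bottom line, 1 = first space, ..., 8 = top line; negative values lie below the staff."""
    return diatonic_index(note) - diatonic_index(BOTTOM_LINE[clef])


def ledger_lines(position: int) -> int:
    """Number of ledger lines needed for a note at this staff position."""
    if position < 0:
        return -position // 2
    if position > 8:
        return (position - 8) // 2
    return 0


for clef in ("treble", "alto", "bass"):
    pos = staff_position("C4", clef)
    print(clef, pos, ledger_lines(pos))
```

Middle C (C4) lands on the first ledger line below the treble staff, on the middle line of the alto staff, and on the first ledger line above the bass staff, which is a quick sanity check for any clef table.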

Sync is a tool for designing music as a living architecture.

Shaped by flow, transformation, and interaction.

Musical systems can be composed using various structural models.

Fractal logic enables recursive development across multiple scales, graph networks define custom topologies and node relationships, cellular automata allow behaviors to evolve based on local conditions, and matrix operations shift musical values dynamically in response to systemic rules.
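Sync's actual automaton rules aren't given. Purely as a familiar example, the sketch below applies Conway's Game of Life rule (birth on 3 neighbours, survival on 2 or 3) to a performer grid that wraps at the edges, treating each live cell as an active performer. The rule choice and function names are assumptions.

```python
# Hypothetical sketch: Conway's Life (B3/S23) as an example local rule, not Sync's own rule set.


def step_life(grid):
    """Advance a 0/1 performer grid by one generation, wrapping at the edges."""
    rows, cols = len(grid), len(grid[0])
    nxt = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            neighbours = sum(
                grid[(r + dr) % rows][(c + dc) % cols]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
            )
            alive = grid[r][c] == 1
            # Born with exactly 3 live neighbours; survives with 2 or 3.
            nxt[r][c] = 1 if neighbours == 3 or (alive and neighbours == 2) else 0
    return nxt


def active_performers(grid):
    """(row, col) of every performer who should play this step."""
    return [(r, c) for r, row in enumerate(grid) for c, v in enumerate(row) if v]


grid = [[0] * 5 for _ in range(5)]
for r in (1, 2, 3):
    grid[r][2] = 1  # a vertical line of three active performers
for _ in range(3):
    print(active_performers(grid))
    grid = step_life(grid)
```

The vertical line flips to a horizontal one and back on alternate steps, the simplest oscillating pattern: each performer's next state depends only on its immediate neighbours, yet the group produces a coherent moving shape.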

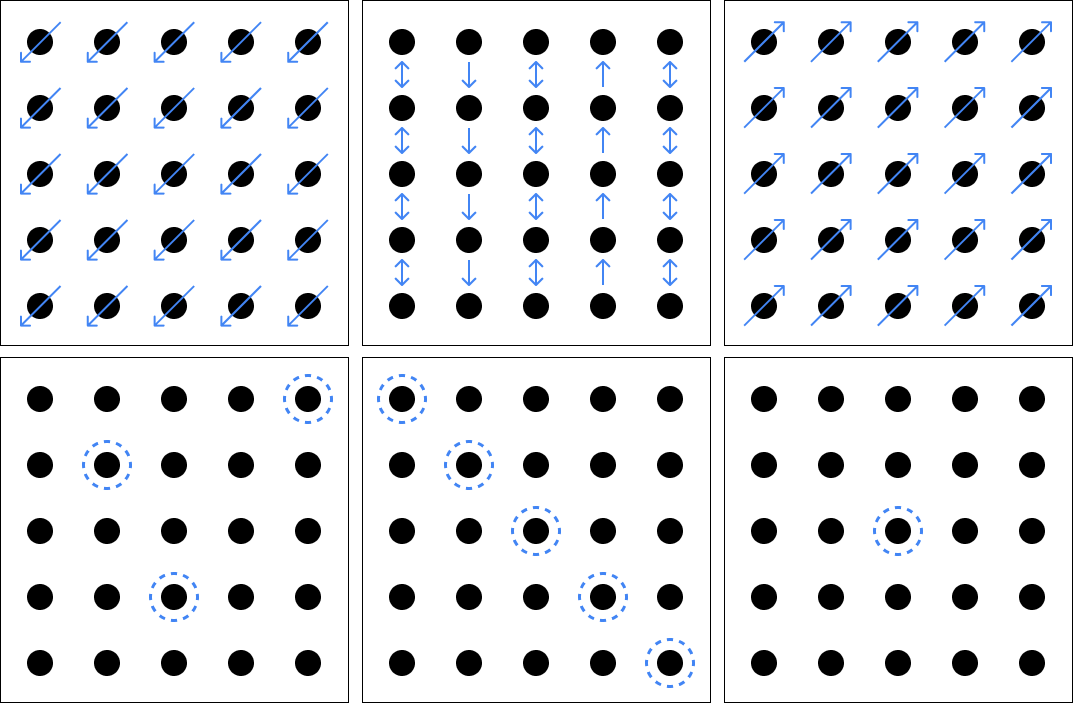

The Dashboard is the central interface for monitoring performer activity.

It offers real-time, high-level visualization of a distributed performer network, enabling the design of spatial music systems that unfold in both time and space.

Key features include:

- Performer Grid View: Displays all connected performers in a visual grid.

- Spatial Mapping: Shows how instructions flow through the network, enabling the design of musical structures that rotate, ripple, or shift across the performer grid, which are key techniques in 3D musical composition.

- Latency Analysis & Sync Timing: Real-time sync diagnostics help assess each performer's responsiveness.

- System Stability & Logging: Debug tools and logging for performance analysis.

- Performer Layout Adaptation: Allows re-arranging performer positions to match the physical layout of the venue.
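The Dashboard's timing method isn't specified. A common technique for this kind of diagnostic is the NTP-style four-timestamp exchange, sketched below as an assumption: the dashboard stamps a probe when it is sent (t0) and when the reply arrives (t3), the device stamps arrival (t1) and reply (t2), and the probe with the smallest round trip gives the most trustworthy clock-offset estimate. `Probe`, `best_estimate`, and `schedule_on_device` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical sketch of NTP-style latency and offset estimation; not Sync's documented protocol.


@dataclass
class Probe:
    t0: float  # dashboard send time (dashboard clock, seconds)
    t1: float  # device receive time (device clock)
    t2: float  # device reply time (device clock)
    t3: float  # dashboard receive time (dashboard clock)

    @property
    def round_trip(self) -> float:
        """Network time only: total elapsed minus the device's own processing time."""
        return (self.t3 - self.t0) - (self.t2 - self.t1)

    @property
    def offset(self) -> float:
        """Device clock minus dashboard clock, assuming equal delay in both directions."""
        return ((self.t1 - self.t0) + (self.t2 - self.t3)) / 2


def best_estimate(probes: list[Probe]) -> tuple[float, float]:
    """Return (offset, round_trip) from the probe with the smallest round trip."""
    best = min(probes, key=lambda p: p.round_trip)
    return best.offset, best.round_trip


def schedule_on_device(dashboard_time: float, offset: float) -> float:
    """Translate a trigger time on the dashboard clock into the device's clock."""
    return dashboard_time + offset


# Device clock 0.5 s ahead; the second probe hit an asymmetric, slower path.
probes = [Probe(10.0, 10.52, 10.521, 10.041), Probe(20.0, 20.6, 20.601, 20.121)]
print(best_estimate(probes))
```

Picking the fastest probe matters because the offset formula assumes symmetric paths: a slow, lopsided exchange (the second probe) biases the estimate, while its longer round trip flags it as less reliable.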

In Sync, harmony is a spatial phenomenon.

Harmonic relationships change with proximity and distribution.

Each performer acts as a localized emitter of sound, and their arrangement in space forms harmonic relationships.

This spatial harmonic relationship is best understood via the metaphor of additive color mixing. Just as overlapping light sources blend into new colors depending on their hue and intensity, overlapping musical voices in Sync create perceptual mixtures. A major triad formed by three surrounding nodes might feel radiant and stable, while a suspended or dissonant set produces tension that varies depending on the listener’s position within the field.
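To make the additive-mixing metaphor concrete, the sketch below (a hypothetical model, not Sync's implementation) weights each sounding pitch class by the free-field inverse-square falloff from its performer to the listener. Moving the listener changes which pitch classes dominate the mix, which is the position-dependent harmony described above. The function name, positions, and clamp distance are assumptions.

```python
import math
from collections import defaultdict

# Hypothetical sketch: a distance-weighted pitch-class profile at a listener position.
PITCH_CLASS = {"C": 0, "C#": 1, "D": 2, "D#": 3, "E": 4, "F": 5,
               "F#": 6, "G": 7, "G#": 8, "A": 9, "A#": 10, "B": 11}


def pitch_class_profile(listener, voices, rolloff=2.0, min_dist=0.25):
    """Weight each sounding pitch class by its emitter's distance from the listener.

    voices: list of ((x, y), pitch_class_name) pairs, one per active performer.
    Returns {pitch_class_number: weight}, normalised so the weights sum to 1.
    """
    weights = defaultdict(float)
    for pos, pc in voices:
        d = max(math.dist(listener, pos), min_dist)   # clamp to avoid division by zero
        weights[PITCH_CLASS[pc]] += 1.0 / d ** rolloff  # rolloff=2 is inverse-square falloff
    total = sum(weights.values())
    return {pc: w / total for pc, w in weights.items()}


# A C major triad at three corners plus a D near the fourth.
voices = [((0, 0), "C"), ((4, 0), "E"), ((2, 4), "G"), ((4, 4), "D")]
for spot in [(0.1, 0.1), (2.0, 2.0), (3.9, 3.9)]:
    profile = pitch_class_profile(spot, voices)
    print(spot, {pc: round(w, 2) for pc, w in sorted(profile.items())})
```

Near one corner the C dominates; at the centre the four voices blend more evenly; near the opposite corner the D pulls the mix toward a suspended colour, just as overlapping lights shift hue as you move between them.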

Space as an active dimension of composition.

Towards a systemic approach to music in motion.

Top Row: Performers execute directional movements: rotations, vertical shifts, and diagonal gestures.

Bottom Row: Highlighted circles indicate active roles or featured sonic gestures, shifting focal points across the group.

Movement can be expressed in many ways. A melodic figure can travel horizontally across a row of performers, rotate around the audience, or ripple diagonally through the grid. A harmonic field can shift as one node drops out and another takes its place. Even silence can move: a rest can travel through the grid like a shadow. This introduces a new kind of listening based on trajectory, perspective, and spatial memory. Because each node is independent, motion becomes a property of the system: a function of sequence, interaction, or displacement.
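The motion types above can be expressed as onset schedules over grid coordinates. The sketch below is illustrative only: the function names, step sizes, and period are assumptions, with row indices growing downward.

```python
import math

# Hypothetical sketch: onset times (seconds) that make a figure travel across a performer grid.


def row_sweep(cols, row, step=0.25):
    """A figure travelling left to right along one row."""
    return {(row, c): c * step for c in range(cols)}


def diagonal_ripple(rows, cols, step=0.25):
    """Nodes on the same anti-diagonal (r + c constant) fire together, so the wavefront moves diagonally."""
    return {(r, c): (r + c) * step for r in range(rows) for c in range(cols)}


def rotation(rows, cols, period=4.0):
    """Onset proportional to each node's angle around the grid centre: one full turn per period.

    With row indices growing downward, increasing angle reads as clockwise on screen.
    """
    cy, cx = (rows - 1) / 2, (cols - 1) / 2
    times = {}
    for r in range(rows):
        for c in range(cols):
            if (r, c) == (cy, cx):
                continue  # the centre node has no defined angle
            angle = math.atan2(r - cy, c - cx) % (2 * math.pi)
            times[(r, c)] = angle / (2 * math.pi) * period
    return times


ripple = diagonal_ripple(5, 5)
for r in range(5):
    print([ripple[(r, c)] for c in range(5)])
```

Because each schedule is just a mapping from node to onset, the same melodic figure can be dispatched along any trajectory, and a travelling rest is simply a node whose entry is skipped at its scheduled time.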

Prototype Performances.

Early tests of the Sync dashboard and distributed sound.

First improvisation

A minimal pitch set was used, focusing on spatial patterns and the interplay between structure and improvisation.

Just for context: the video on the website is a screen recording of the first live test improvisation with the Sync system. It was created purely to verify that the communication and timing were functioning correctly. What you see is the Dashboard interface — each highlighted circle represents a performer’s activity, not the musical content itself. It is only a technical trial, not a performance.

Voices on 8 Notes

On the left, the Sync Dashboard shows live performer activity: each circle represents one performer, and when it turns yellow, that performer is active. The visualization offers a bird’s-eye view of how individual contributions form the ensemble’s overall texture. On the right, four mobile interfaces simulate what performers D2, D8, D24, and D3 see on their screens. Each display features a floating staff with personalized notes: unique visual scores that guide each performer’s timing and pitch.

Voices on 8 Notes explores how a simple harmonic framework can generate complex collective behavior. Using only eight notes, this piece was composed and performed within Sync.

Inspired by Debussy’s “Nuages”

On the left side of the screen, the Sync dashboard displays real-time performer activity. Each circle represents a performer; when it turns yellow, the performer is active, offering a bird’s-eye view of the ensemble’s dynamics.

On the right, three mobile phone screens simulate the performer interfaces for positions D0, D7, and D23. Each interface shows a floating staff with personalized notes, a direct representation of what each performer sees on their device.

This screen recording presents a live improvisation using the Sync system, inspired by Debussy’s “Nuages”. The improvisation blends free playing with looped patterns. It is only a technical trial, not a performance.

Automata w37d6

On the left side of the screen, the Sync dashboard displays real-time performer activity. Each circle represents a performer; when it turns yellow, the performer is active, offering a bird’s-eye view of the ensemble’s dynamics.

On the right, mobile phone screens simulate the performer interfaces for positions D0 and D13. Each interface shows a floating staff with personalized notes, a direct representation of what each performer sees on their device.

Automata w37d6 takes inspiration from cellular automata, systems where simple local rules give rise to complex global patterns. This screen recording captures an early Sync prototype performance, exploring how distributed instructions ripple across a network of performers like evolving cells in a grid. Stay until the end, where the system really takes off and the patterns go a bit wild.

Keywords

Distributed Composition, Networked Performance, Spatial Sound, Generative Systems, Emergence, Real-time Music, Systemic Music, Algorithmic Composition
